Guardrails

Guardrails refer to the mechanisms and strategies put in place to ensure the models generate appropriate, accurate, and safe content. These guardrails can include predefined rules, regulatory constraints, ethical guidelines, and technical measures such as content filters, training data curation, and real-time moderation. The purpose of these guardrails is to prevent the LLMs from producing harmful, biased, or misleading outputs, thereby protecting users and maintaining the integrity of the applications leveraging these models. Essentially, guardrails help balance the powerful capabilities of LLMs with the necessity for responsible and ethical AI deployment.

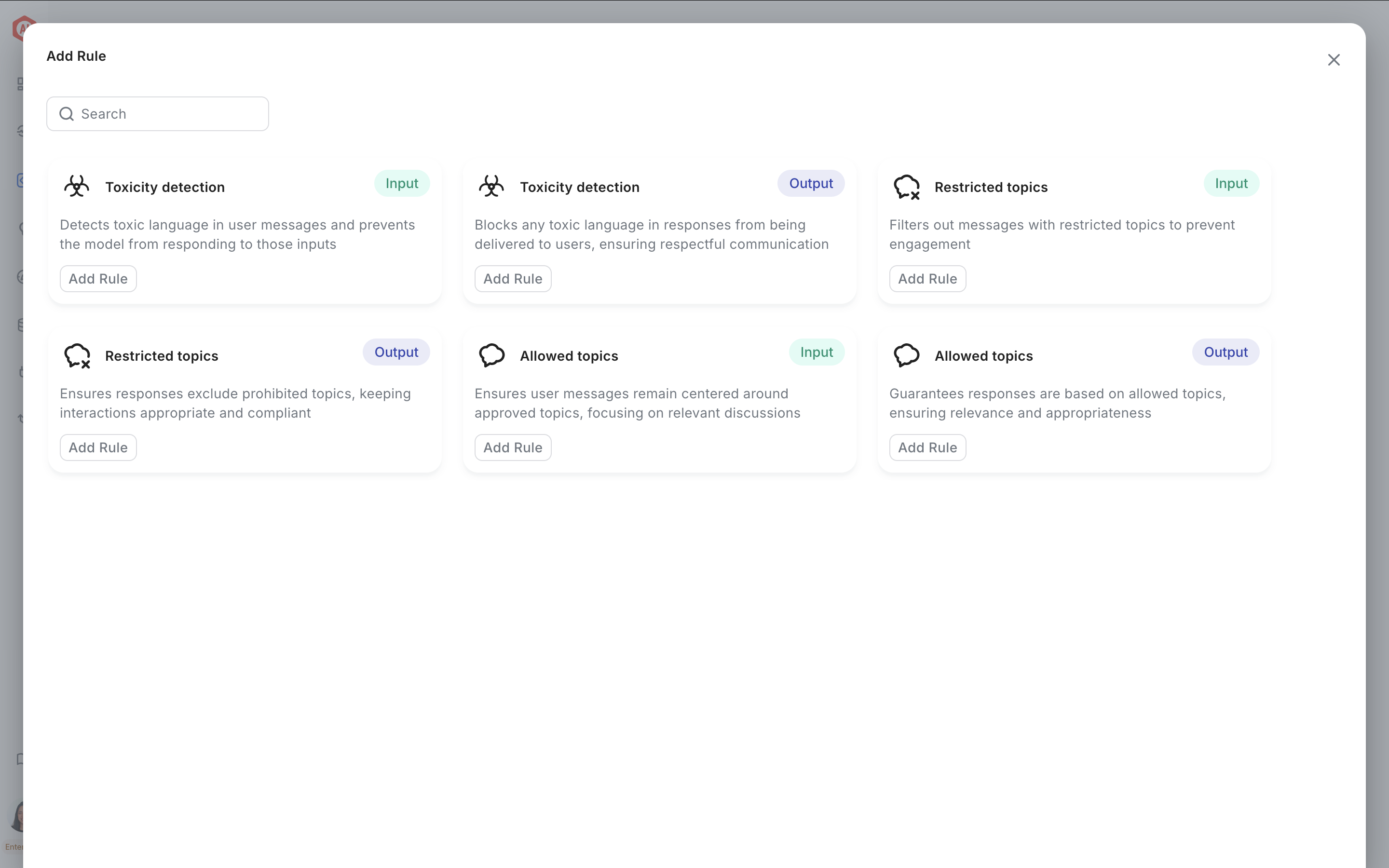

There are 2 types of guardrails:

- Input / Prompt guardrails: These guardrails are designed to evaluate and filter user inputs (or prompts) before they are processed by the system.

- Output guardrails: These guardrails are designed to evaluate and filter the responses generated by the system before they are delivered to the user.

Updated 13 days ago